Prof. Douglas Thain at Notre Dame

Blog: Why Makeflow Works for New Users

01 Feb 2012 - Douglas Thain

In past articles, I have introduced Makeflow, which is a large-scale workflow engine that we have created at Notre Dame.

Of course, Makeflow is certainly not the first or only workflow engine out there. But, Makeflow does have several unique properties that make it an interesting platform for bringing new people into the world of distributed computing. And, it is the right level of abstraction that allows us to address some fundamental computer science problems that result.

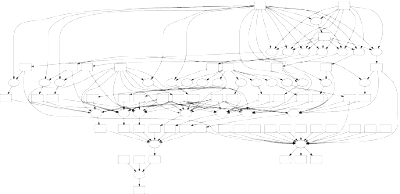

Briefly, Makeflow is a tool that lets the user express a large number of tasks by writing them down as a conventional makefile. You can see an example on our web page. A Makeflow can be just a few rules long, or it can consist of hundreds to thousands of tasks, like this EST pipeline workflow:

Once the workflow is written down, you can then run Makeflow in several different ways. You can run it entirely on your workstation, using multiple cores. You can ask Makeflow to send the jobs to your local Condor pool, PBS or SGE cluster, or other batch system. Or, you can start the (included) Work Queue system on a few machines that you happen to have handy, and Makeflow will run the jobs there.
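To make the "several different ways" concrete, here is a minimal Python sketch that builds the command line for one workflow file against different back ends. It assumes that the `makeflow` command takes a `-T` option naming the batch system, with values such as `local`, `condor`, `sge`, and `wq` (for Work Queue); treat those spellings as assumptions and check `makeflow -h` on your installation. The helper `makeflow_command` and the file name `example.makeflow` are hypothetical, and the sketch only prints the commands rather than running them.

```python
# Hypothetical helper: build (but do not run) a makeflow command line.
# Assumption: `makeflow -T <type> <file>` selects the batch system.
import shlex

# Assumed type names; other batch systems would need their own entries.
BACKENDS = ("local", "condor", "sge", "wq")


def makeflow_command(workflow, backend="local"):
    """Return the argv list for running `workflow` on `backend`."""
    if backend not in BACKENDS:
        raise ValueError(f"unknown backend: {backend!r}")
    return ["makeflow", "-T", backend, workflow]


if __name__ == "__main__":
    # The workflow file is identical in every case; only the backend changes.
    for backend in BACKENDS:
        print(shlex.join(makeflow_command("example.makeflow", backend)))
```

Only the back-end name changes from one line to the next. In the Work Queue case, the workers still have to be started separately on the machines you want to use.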

Over the last few years, we have had very good experience getting new users to adopt Makeflow, ranging from highly sophisticated computational scientists all the way to college sophomores learning the first principles of distributed computing. There are several reasons why this is so:

- A simple and familiar language. Makefiles are already a well-known and widely used way of expressing dependency and concurrency, so the language is easy to explain. Unlike more elaborate languages, it is brief and easy to read and write by hand. A text-based language can be versioned and tracked by any existing source control method.

- A neutral interface and a portable implementation. Nothing in a Makeflow references any particular batch system or distributed computing technology, so existing workflows can be easily moved between computing systems. If I use Condor and you use SGE, there is nothing to prevent my workflow from running on your system.

- The data needs are explicit. A subtle but significant difference between Make and Makeflow is that Makeflow treats your statement of file dependencies very seriously. That is, you must state exactly which files (or directories) your computation depends upon. This is slightly inconvenient at first, but vastly improves the ability of Makeflow to create the right execution environment, verify a correct execution, and manage storage and network resources appropriately.

- An easy on-ramp to large resources. We have gone to great lengths to make it absolutely trivial to run Makeflow on a single machine with no distributed infrastructure. Using the same framework, you can move to harnessing a few machines in your lab (with Work Queue) and then progressively scale up to clusters, clouds, and grids. We have users running on 5 cores, 5000 cores, and everything in between.
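The first and third points can be illustrated with a toy Python sketch of make-style rules. This is not Makeflow's scheduler, only an illustration under stated assumptions: the `Rule` class, the `run_in_waves` function, and the file names are all hypothetical. Each rule lists every file it needs, including the executable, and that explicit list is enough to work out which tasks could run at the same time.

```python
# Toy illustration of make-style rules; not Makeflow's actual scheduler.
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    targets: tuple   # files the command produces
    sources: tuple   # files the command needs, stated explicitly
    command: str


def run_in_waves(rules, initial_files):
    """Group rules into waves; rules in one wave could run concurrently."""
    available = set(initial_files)
    # Rules whose outputs already exist need no work.
    remaining = [r for r in rules if not set(r.targets) <= available]
    waves = []
    while remaining:
        # A rule is ready once every one of its declared sources exists.
        wave = [r for r in remaining if set(r.sources) <= available]
        if not wave:
            raise RuntimeError("missing input or circular dependency")
        waves.append([r.command for r in wave])
        for r in wave:
            available.update(r.targets)   # pretend the command succeeded
        remaining = [r for r in remaining if r not in wave]
    return waves


# Hypothetical workflow: two independent steps, then a merge.
RULES = [
    Rule(("a.out",), ("a.in", "sim.exe"), "./sim.exe a.in > a.out"),
    Rule(("b.out",), ("b.in", "sim.exe"), "./sim.exe b.in > b.out"),
    Rule(("all.out",), ("a.out", "b.out"), "cat a.out b.out > all.out"),
]

if __name__ == "__main__":
    waves = run_in_waves(RULES, {"a.in", "b.in", "sim.exe"})
    for number, wave in enumerate(waves, 1):
        print(f"wave {number}: {wave}")
```

Because `sim.exe` appears in each rule's sources, a system reading these rules knows exactly which files to ship to a remote machine before running the command. Leave out `b.in` from the initial files and the sketch fails early instead of running a task that cannot succeed.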

Of course, our objective is not simply to build software. Makeflow is a starting point for engaging our research collaborators, which allows us to explore some hard computer science problems related to workflows. In the next article, I will discuss some of those challenges.
